MaCVi 2024 Challenges have concluded. Check the results on the leaderboards. Thank you for participating!

An integral part of the workshop are the four challenges UAV-based Multi-Object Tracking with Reidentification

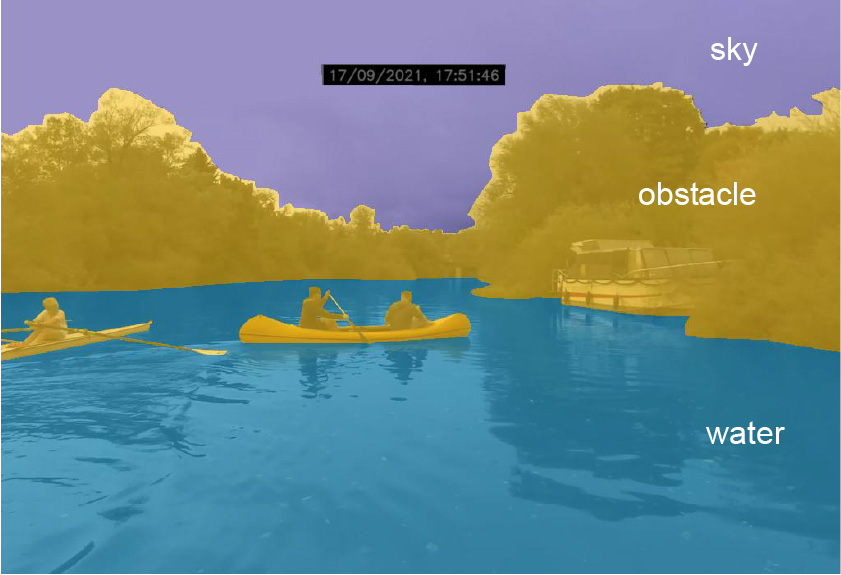

and USV-based Obstacle Detection, Segmentation and Tracking. These challenges aim to

foster the development of computer vision algorithms in SaR missions and autonomous boat navigation, respectively.

The UAV-based challenge is mainly based on an updated version of SeaDronesSee, whereas the USV-based challenges are based on the LaRS benchmark and a new dataset, respectively.

This year, the focus of these challenges will be on both accuracy (algorithm performance as measured by challenge-specific evaluation metrics) and runtime on embedded hardware. For certain challenge tracks, we require the method to run in real time on embedded hardware. For all other challenge tracks, we still require participants to report the processing speed of their algorithms in frames per second, along with the specification of the hardware used.
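As a rough illustration of how a frames-per-second figure and a basic hardware description might be collected for such a report, consider the sketch below. It is not an official measurement protocol of the challenge: the helper `measure_fps`, its `warmup` parameter, and the stand-in `dummy_model` are hypothetical names introduced here, and the timing simply uses wall-clock time around the per-frame call.

```python
import platform
import time


def measure_fps(process_frame, frames, warmup=5):
    """Return wall-clock frames per second of `process_frame` over `frames`.

    Hypothetical helper for illustration; the challenge does not
    prescribe this procedure.
    """
    frames = list(frames)
    if not frames:
        raise ValueError("need at least one frame to measure FPS")

    # Warm-up runs are excluded from timing (caches, lazy initialization).
    for frame in frames[:warmup]:
        process_frame(frame)

    start = time.perf_counter()
    for frame in frames:
        process_frame(frame)
    elapsed = time.perf_counter() - start

    return len(frames) / elapsed


def dummy_model(frame):
    # Stand-in for a real detector or tracker (assumption).
    time.sleep(0.001)
    return frame


if __name__ == "__main__":
    fps = measure_fps(dummy_model, range(50))
    # Basic host description from the standard library; accelerator
    # details (e.g. GPU model) would have to be added by hand.
    print(f"FPS: {fps:.1f}")
    print(f"Platform: {platform.platform()}")
    print(f"Machine: {platform.machine()}")
```

For methods running on a GPU or another asynchronous accelerator, the device usually needs to be synchronized before each timestamp is taken, otherwise the measured time may only cover kernel launches.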

The top three teams of each track (some challenges have multiple tracks) will be featured as co-authors of a challenge summary paper in the workshop proceedings (included in IEEE Xplore) upon submitting a short technical report (max. half a page) describing their method. We reserve the right to cancel a subchallenge if fewer than four separate teams (no collusion allowed) participate. Furthermore, to win, a method must beat a given baseline. Note that only one model per team per track counts, even if multiple models of the same team are within the top three; i.e., the team's worse models are ignored on the leaderboard (but do not count against it). You may still participate and win in multiple tracks. Also, note the short deadline for the technical report.

Note that we (as organizers) may upload models for the challenges, but we do not compete for a winning position (i.e., our models do not count on the leaderboard and merely serve as references). Thus, if your method is worse (in any metric) than one of the organizers' models (except for defined baselines), you are still encouraged to submit it, as you may still win.