Maritime Computer Vision Workshop @ CVPR 2026


Vision-to-Chart Data Association

Quick links:

- Dataset download

- Submit

- Leaderboard

- Ask for help

Quick Start

- Download the dataset from the dataset page.

- Train your model on the provided dataset (or use the 120-epoch checkpoint). Find starter code here.

- Evaluate it on the validation split using the provided evaluation script (coming soon).

- Take note of the requirements for final submission.

Overview

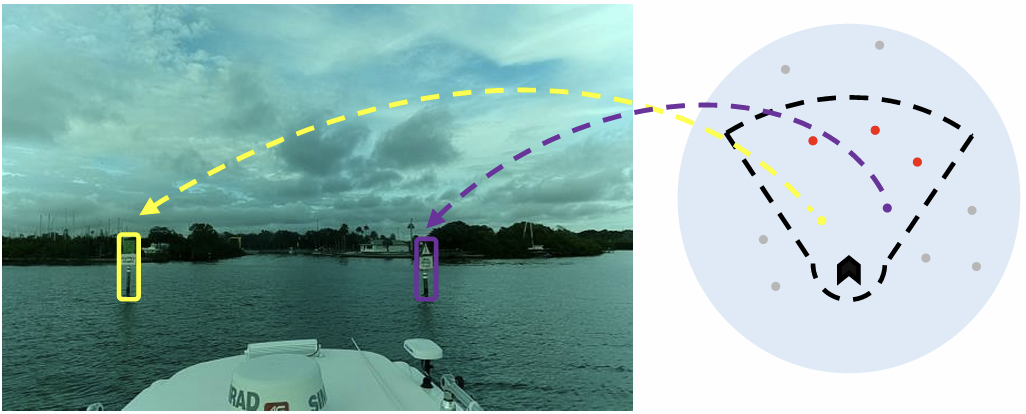

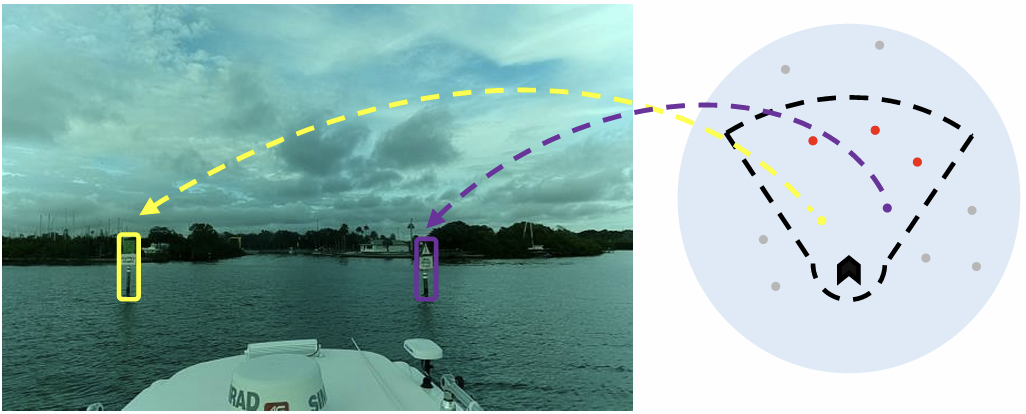

This challenge is about augmenting navigation data for maritime vehicles (boats). Given an image captured by a boat-mounted camera and the chart markers of nearby navigational aids (buoys), the task is to identify the visible buoys and match them with their corresponding chart markers. The goal is to maximize the number of detected buoys and correct matches while minimizing the localization error of detected buoys and the number of false detections.

The challenge is based on the paper "Real-Time Fusion of Visual and Chart Data for Enhanced Maritime Vision".

Task

The task is to develop a deep learning model capable of detecting buoys in a monocular image, and matching them to associated chart positions.

Models will be evaluated on both detection and matching accuracy as well as localization error.

Dataset

The dataset consists of 5189 entries. Each entry contains a single RGB image, a variable number of queries, and a variable number of labels. Each query denotes the chart data of a nearby buoy and has the format (query id, distance to ship [meters], bearing from ship centerline [degrees], longitude, latitude). Each label denotes a nearby buoy visible in the image and has the format (query id, BB center x, BB center y, BB width, BB height), where the bounding box (BB) specification follows the YOLO format and denotes the position of the corresponding query in the image.

Note that this dataset contains entries where no buoy is visible.
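To make the entry layout concrete, here is a minimal Python sketch of one way to hold queries and labels in memory. The class and field names are hypothetical, the integer type of the query id is an assumption, and the box conversion assumes the usual YOLO convention of center and size values normalized to the range 0 to 1. The on-disk file layout is not described on this page, so no parsing is shown.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Query:
    """Chart data of one nearby buoy (field names are hypothetical)."""
    query_id: int        # assumption: ids are integers
    distance_m: float    # distance to ship [meters]
    bearing_deg: float   # bearing from ship centerline [degrees]
    longitude: float
    latitude: float


@dataclass(frozen=True)
class Label:
    """One buoy visible in the image, tied to a query by its id."""
    query_id: int
    cx: float  # BB center x, assumed normalized to [0, 1]
    cy: float  # BB center y, assumed normalized to [0, 1]
    w: float   # BB width, assumed normalized to [0, 1]
    h: float   # BB height, assumed normalized to [0, 1]

    def to_pixel_corners(self, img_w: int, img_h: int) -> Tuple[float, float, float, float]:
        """Convert the normalized center/size box to (x1, y1, x2, y2) in pixels."""
        x1 = (self.cx - self.w / 2) * img_w
        y1 = (self.cy - self.h / 2) * img_h
        x2 = (self.cx + self.w / 2) * img_w
        y2 = (self.cy + self.h / 2) * img_h
        return x1, y1, x2, y2


def visible_queries(queries: List[Query], labels: List[Label]) -> List[Query]:
    """Return the queries that have a label, i.e. whose buoy is visible."""
    labelled_ids = {label.query_id for label in labels}
    return [q for q in queries if q.query_id in labelled_ids]
```

An entry without visible buoys simply has an empty label list, in which case `visible_queries` returns an empty list.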

The dataset is provided in a predefined format split into training and validation sets for use with the baseline training code.

Participants are free to further manipulate or reorganize the data according to their own experimental needs.

The leaderboard evaluation will be conducted on a separate, unseen dataset that is not shared with the participants.

In addition, raw IMU data corresponding to each sequence is provided so that participants can experiment with their own methods. The IMU data is not integrated into the baseline training code; however, participants may extract and incorporate these raw measurements into their own algorithms during training if desired.

Evaluation metrics

The submitted models are evaluated on a test set that is not publicly available.

The following metrics are employed to assess model capabilities:

- Detection: Precision, Recall and F1-Score of detected buoys

- Matching: Mean IoU (mIoU) of the bounding boxes (BB) of detected buoys

- Overall (decider): Mean of F1-Score and mIoU
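The metrics listed above can be sketched in Python as follows. This is an illustration only, not the official evaluation script: how a detection is counted as true positive, false positive, or false negative, and which boxes enter the mIoU, are decided by that script and are not specified on this page. The function names are hypothetical, and the helper therefore takes the counts and per-box IoU values as inputs. Boxes are assumed to be YOLO-style (center x, center y, width, height).

```python
from typing import Dict, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (cx, cy, w, h), YOLO-style


def iou_cxcywh(a: Box, b: Box) -> float:
    """Intersection over union of two center/size boxes."""
    ax1, ay1, ax2, ay2 = a[0] - a[2] / 2, a[1] - a[3] / 2, a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1, bx2, by2 = b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def challenge_scores(tp: int, fp: int, fn: int, ious: Sequence[float]) -> Dict[str, float]:
    """Precision, recall, F1, mIoU and their mean (the 'overall' metric).

    Assumption: tp/fp/fn and the IoU list come from a matching step
    defined elsewhere (e.g. by the official evaluation script).
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    miou = sum(ious) / len(ious) if ious else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "miou": miou,
        "overall": (f1 + miou) / 2,
    }
```

For example, 8 true positives, 2 false positives, and 2 false negatives give an F1-Score of 0.8; with a mean IoU of 0.6 over the matched boxes, the overall score would be 0.7.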

Participate

To participate in the challenge, follow these steps:

- Download the dataset from the dataset page.

- Train a deep learning model on the dataset.

- Use the provided evaluation script to get preliminary performance on the validation split.

- Upload your results via the upload page to appear on the leaderboard.

- Upload at most three final submissions, following the Final Submission section.

Get Started

To help you get started and provide a brief introduction to the topic, we have developed a fusion transformer based on DETR. You can find the code here and the 120-epoch pre-trained checkpoint here.

Final Submission

The final submission will be evaluated on the private test data and hence determines the final performance score of the participants. This challenge aims to give participants as much freedom as possible. However, to ensure a fair and streamlined evaluation, the following restrictions apply to the final submission.

- Final submissions must be submitted as a single zip file which contains the model weights, associated code and instructions to run it using the provided evaluation script.

- The evaluation script must be modified only within the limits specified in the script.

- The submission zip file may include additional files, but its total size must not exceed 1 GB.

- Additional requirements (e.g. a Docker image) that would exceed this limit can be included as a downloadable reference in the run instructions.

- The final, uncompressed project including all requirements and model weights must not exceed 5 GB.

- Participants may change their final submission at most 3 times.

- Upload your final submission here by selecting the Vision-to-Chart Data Association Final Submission track. The upload link for the leaderboard is for preliminary evaluations (on the validation split) only.

- Be sure to have only a single final submission active at once. Groups/Users with multiple final submissions will not be evaluated on the test set. If you change your submission, be sure to delete all previous submissions.

- Models must be in PyTorch format and must have at most 250 million parameters.

- Evaluation must work with the unmodified evaluation script (except for changes to the two clearly marked functions).

- Evaluation will be done using a Linux Ubuntu host system equipped with Nvidia A4000 GPUs. Participants must ensure that their code is compatible with this setup. Test runs on this hardware can be requested up to 3 days before the challenge deadline.
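Before uploading, the size limits above can be checked locally. The following Python sketch uses only the standard library; the function name is hypothetical, and it assumes 1 GB means 10^9 bytes, which this page does not specify. It only counts the files inside the zip, so any externally downloaded requirements (such as a Docker image) still have to be added to the uncompressed total by hand.

```python
import os
import zipfile

GB = 10**9  # assumption: decimal gigabytes; the page does not define the unit
MAX_ZIP_BYTES = 1 * GB
MAX_UNCOMPRESSED_BYTES = 5 * GB


def check_submission_zip(path: str) -> dict:
    """Report compressed and uncompressed sizes of a submission zip.

    Hypothetical helper: it does not account for requirements that are
    downloaded separately according to the run instructions.
    """
    zip_bytes = os.path.getsize(path)
    with zipfile.ZipFile(path) as zf:
        # file_size is the uncompressed size of each archive member
        uncompressed_bytes = sum(info.file_size for info in zf.infolist())
    return {
        "zip_bytes": zip_bytes,
        "uncompressed_bytes": uncompressed_bytes,
        "zip_ok": zip_bytes <= MAX_ZIP_BYTES,
        "uncompressed_ok": uncompressed_bytes <= MAX_UNCOMPRESSED_BYTES,
    }
```

Calling `check_submission_zip("submission.zip")` (the file name is a placeholder) returns a dictionary whose `zip_ok` and `uncompressed_ok` flags should both be true before the archive is uploaded.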

Terms and Conditions

- Submissions must be made before the challenge deadline listed on the dates page.

- The winner is determined by the highest score on the 'overall' metric.

- Final submissions must follow the format specified in 'Final Submission'.

If you have any questions, please join the MaCVi Support forum.