Maritime Computer Vision Workshop @ CVPR 2026

Challenges / Multimodal Semantic Segmentation Challenge

Multimodal Semantic Segmentation Challenge

Quick links:

Dataset paper

Dataset

Submit

Leaderboards

Ask for help

Overview

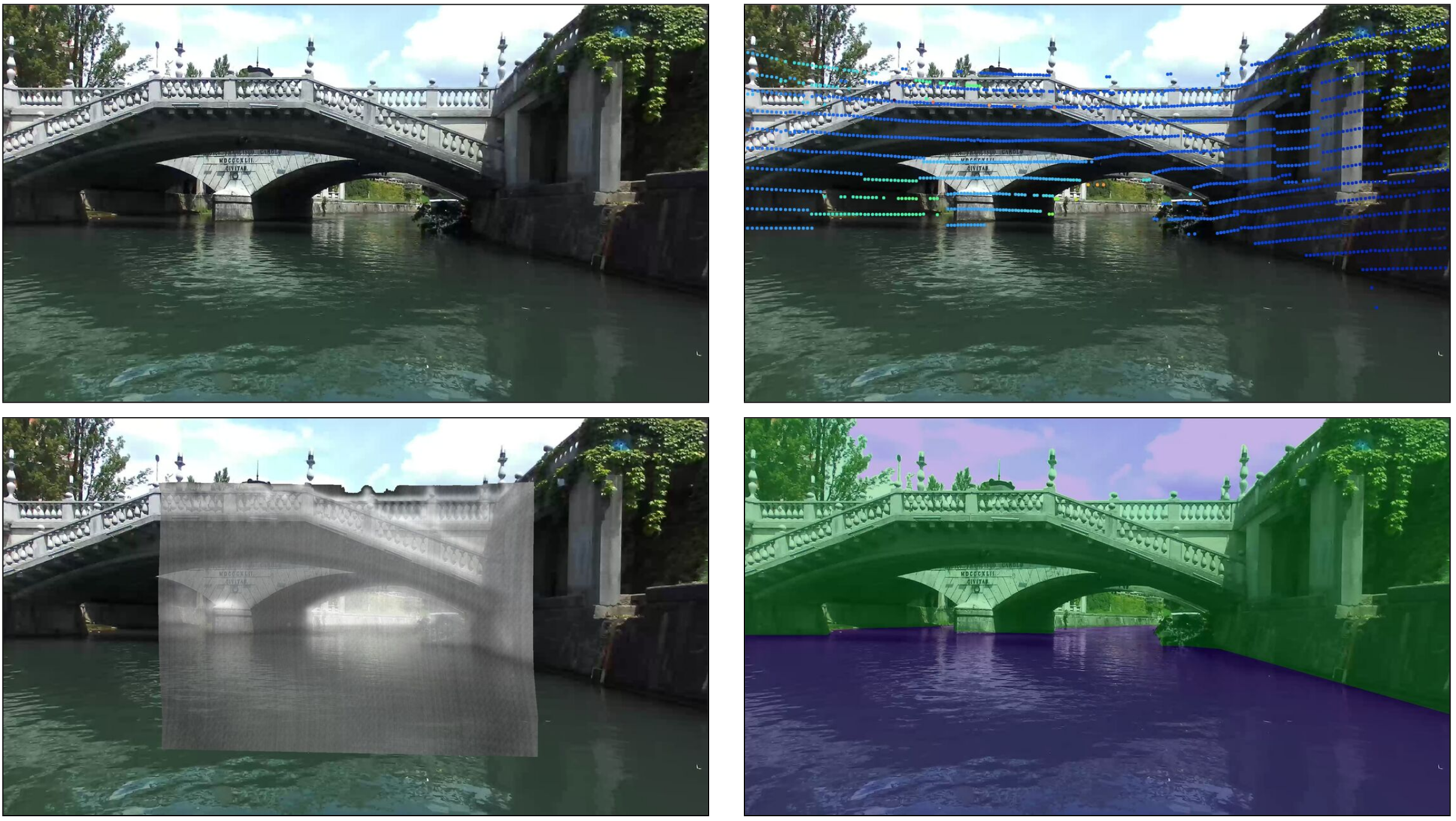

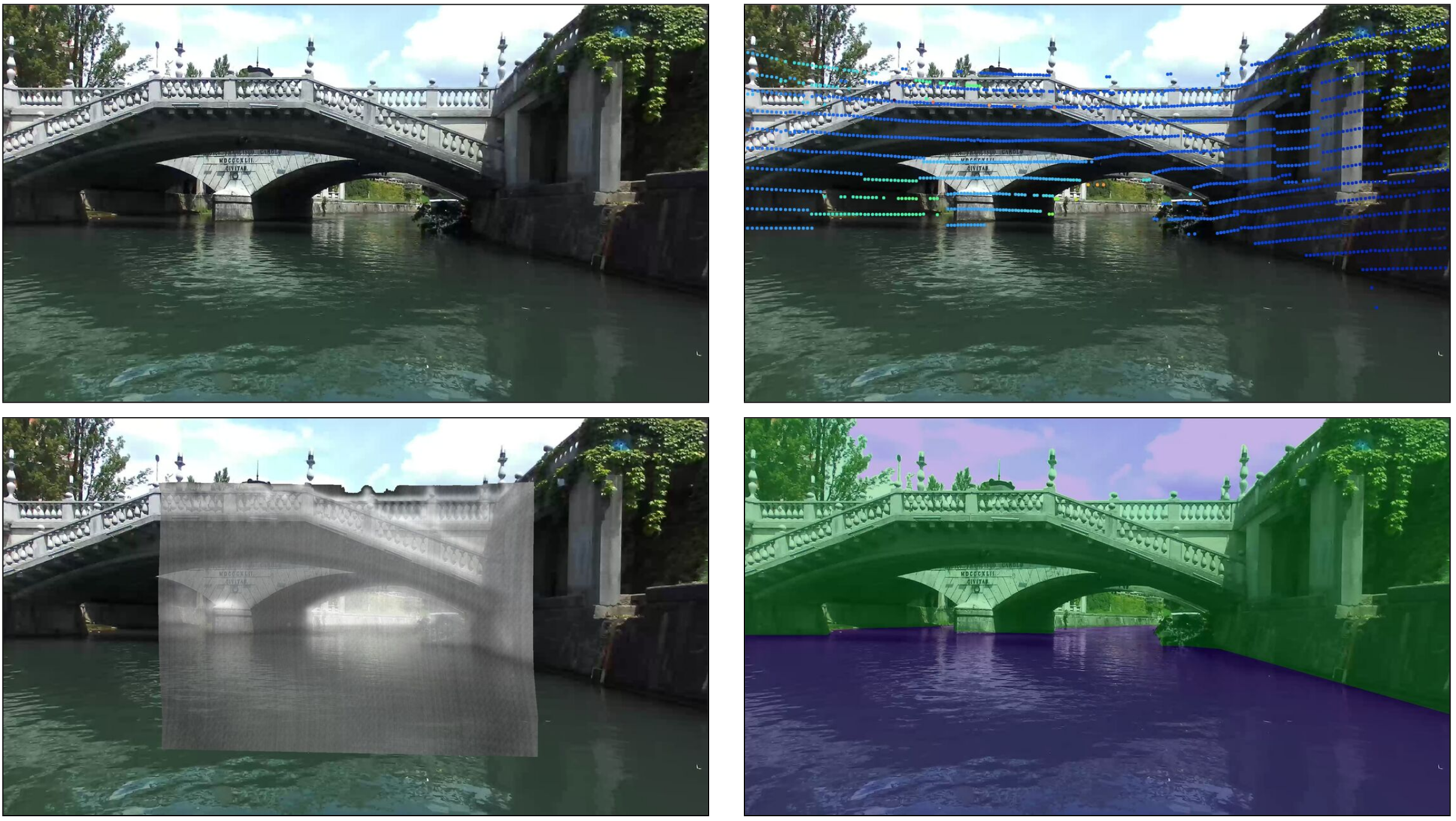

Using multiple different sensors in a unified model can enable or improve scene interpretation under difficult circumstances, such as low-light or rain.

Task

The task is to produce semantic labels for the given sensor data, where the labels are as follows:

-

static obstacle (1)

- shore, piers, buildings, bridges, etc.

-

dynamic obstacle (2)

- swimmers, rowboats, paddleboards, sailboats, buoys, etc.

- water (3)

- sky (4)

Dataset

The multimodal semantic segmentation challenge is based on the MULTIAQUA dataset, with a subset of the sensors specifically suited for low-light perception. The dataset contains synchronized and aligned data from RGB camera, LIDAR and thermal (FLIR) camera. The RGB data is structured as

[HxWx3] matrices, while the thermal images are provided as

[HxW] arrays. The lidar data is not structured upfront, but contains raw and processed values in a

numpy array. The LIDAR values provided for each point are the

X,Y,Z 3D coordinates, the distance

d to the camera, the point's reflectivity

r, and the coordinates of the point's 2D projection onto the RGB image plane

x,y. The thermal images and LIDAR points are pre-processed and projected to the image plane of the RGB images. The specific way to use the thermal and LIDAR data is not specified, the scaling, channels and pre-processing are left to the participants' discretion.

Splits

The dataset contains a training and a validation set of multimodal data, along with corresponding semantic labels. The train and validation sets were captured in daytime under different weather conditions and on different waterways. The test set was captured during nighttime and serves as a challenging use case where all three modalities must be used. The ground truth annotations for the test set are

not provided to the participants.

Participate

The models must be structured so they are able to process all three modalities, and additionally, the models

must process all three modalities.

The challenge is structured as follows: the participants receive data that was captured during daytime as well as the corresponding ground truth labels. This will serve as the training data for the challenge. Using pretrained models and other datasets is allowed, but the data used in training the model must be stated in the submission report.

The final evaluation will be performed on the MaCVi server on the nighttime dataset. The main metric of the challenge is M, which is an average of the mIoU on the validation set and the mIoU on the nighttime test set. The participants will have to upload their model's predictions on the validation and test sets, then the performance metrics will be calculated on the server and displayed on the leaderboard. In the case of a tie, the model with the highest test set mIoU wins.

Terms and Conditions

- Submissions must be made before the deadline as listed on the dates page.

Submissions made after the deadline will not count towards the final results of the challenge.

- Submissions are limited to one per day per challenge. Failed submissions do not count towards

this limit.

- The winner is determined by the M metric

- You are allowed to use additional publicly available data for training but you must disclose them at the time of

upload.

This also applies to pre-training.

- In order for your method to be considered for the winning positions and included in the results paper, you will

be required to submit a short report describing your method. More information in regards to this will be

released towards the end of the challenge.

- Note that we (as organizers) may upload models for this challenge, BUT we do not compete for a winning position

(i.e. our models do not count on the leaderboard and merely serve as references). Thus, if your method is worse

(in any metric)

than one of the organizer's, you are still encouraged to submit your method.