3rd Workshop on Maritime Computer Vision (MaCVi)

Challenge submission has concluded. Thank you for participating. Results will be revealed soon; stay tuned!

USV-based Panoptic Segmentation

Quick Start

- Download the LaRS dataset from the dataset page.

- Train your model on the LaRS training set.

- Upload a .zip file with predictions on the upload page.

Overview

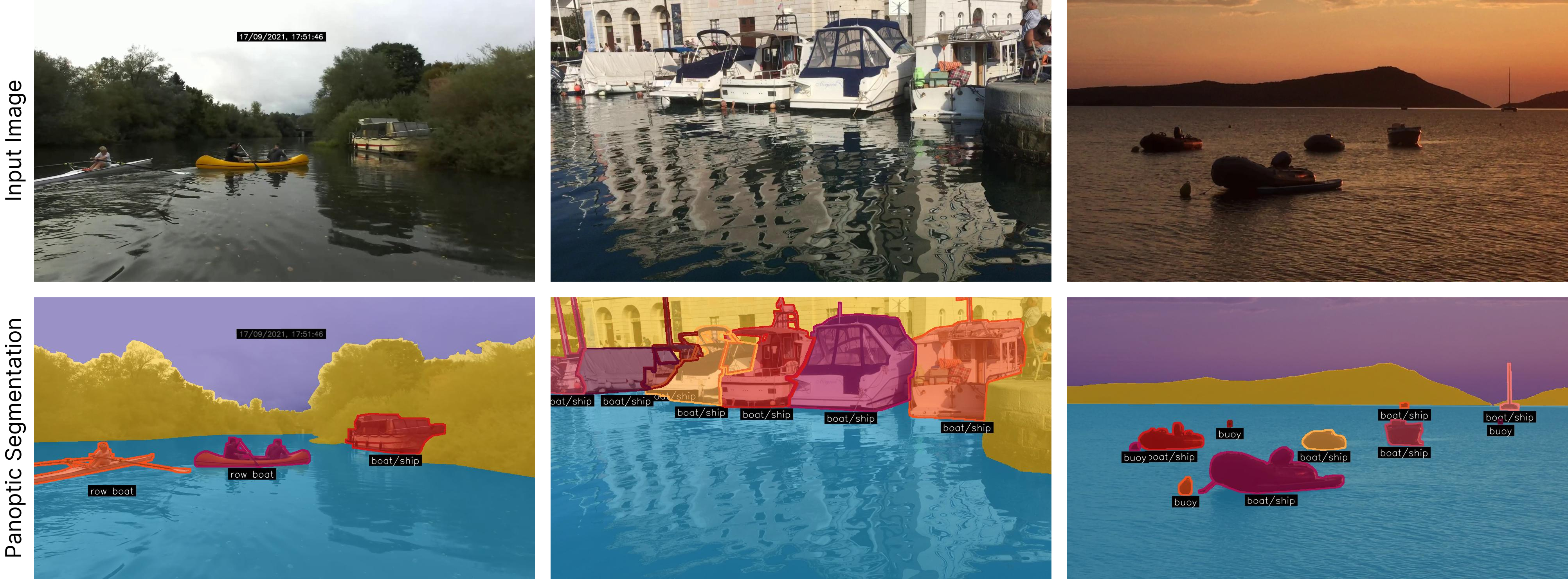

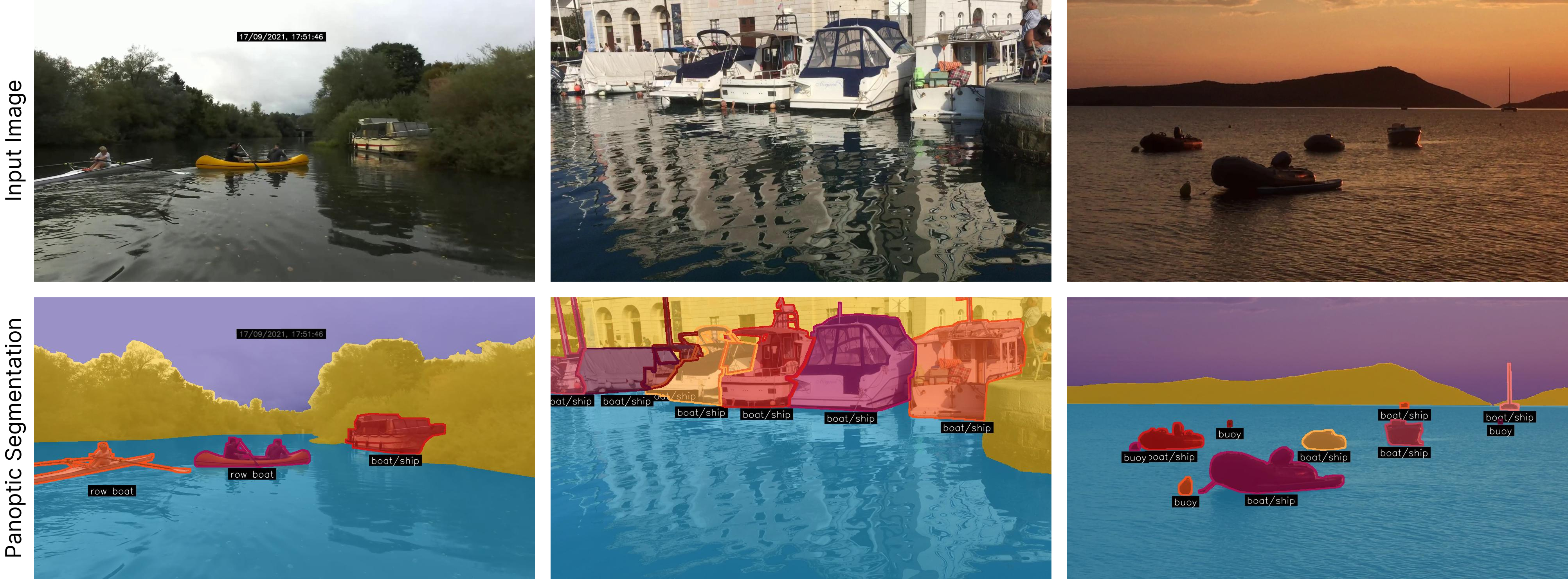

MaCVi 2025's USV challenges feature the LaRS Dataset. LaRS focuses on scene diversity and covers a wide range of environments, including inland waters such as lakes, canals and rivers.

To perform well on LaRS, one needs to build a robust model that generalizes well to various situations.

For the Panoptic Segmentation track, your task is to develop a panoptic segmentation method that parses the input scene into several background (stuff) and foreground (things) categories. The method should segment individual instances of the foreground classes. You may train your methods on the LaRS training set, which has been designed specifically for this use case. You may also use additional publicly available data to train your method. If you do, please disclose it during the submission process.

Task

Create a panoptic segmentation method that parses the input scene into several background (water, sky, static obstacles) classes and foreground (dynamic obstacles) instances.

Dataset

LaRS consists of 4000+ USV-centric scenes captured in various aquatic domains.

It includes per-pixel panoptic masks for water, sky and different types of obstacles.

At a high level, obstacles are divided into i) dynamic obstacles, which are objects floating in the water (e.g. boats, buoys, swimmers) and ii) static obstacles, which are all remaining obstacle regions (shoreline, piers).

Additionally, dynamic obstacles are categorized into 8 different obstacle classes: boat/ship, row boat, buoy, float, paddle board, swimmer, animal and other.

This challenge is based on the panoptic segmentation sub-track of LaRS: the annotations include panoptic segmentation masks which denote regions in the image belonging to each segment (i.e. instance), and an annotation JSON file containing annotations (class, etc.) for each segment in the dataset.

Evaluation metrics

We use the widely adopted Panoptic Quality (PQ) metric to evaluate panoptic segmentation performance on LaRS.

We also report PQ separately for thing and stuff classes, as well as its two contributing factors, recognition quality (RQ) and segmentation quality (SQ).

The winner of the challenge will be determined by the PQ metric (higher is better).
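To illustrate how PQ relates to SQ and RQ, the following minimal sketch follows the standard PQ definition, in which PQ = SQ × RQ, SQ is the mean IoU over matched segments, and RQ = TP / (TP + 0.5·FP + 0.5·FN). It is not the evaluation code used on the server. The function name and its inputs are hypothetical, and it assumes that predicted and ground-truth segments of one class have already been matched (a match being a pair with IoU above 0.5).

```python
from typing import Sequence, Tuple


def panoptic_quality(
    matched_ious: Sequence[float], num_fp: int, num_fn: int
) -> Tuple[float, float, float]:
    """Return (PQ, SQ, RQ) for a single class.

    matched_ious: IoU of every true-positive match (assumed IoU > 0.5).
    num_fp: number of predicted segments without a match.
    num_fn: number of ground-truth segments without a match.
    """
    num_tp = len(matched_ious)
    denom = num_tp + 0.5 * num_fp + 0.5 * num_fn
    if denom == 0:
        # No predictions and no ground truth for this class.
        return 0.0, 0.0, 0.0
    # Segmentation quality: average IoU over the matched segments.
    sq = sum(matched_ious) / num_tp if num_tp else 0.0
    # Recognition quality: an F1-style detection score.
    rq = num_tp / denom
    return sq * rq, sq, rq


if __name__ == "__main__":
    pq, sq, rq = panoptic_quality([0.9, 0.7], num_fp=1, num_fn=1)
    print(f"PQ={pq:.3f} SQ={sq:.3f} RQ={rq:.3f}")
```

With two matches of IoU 0.9 and 0.7, one false positive and one false negative, SQ is 0.8 and RQ is 2/3, giving a PQ of roughly 0.533. The overall score is typically obtained by averaging the per-class values.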

Furthermore, we require every participant to submit information on the speed of their method measured in frames per second (FPS). Please also indicate the hardware that you used for benchmarking the speed. Lastly, you should indicate which datasets (including those used for pretraining) you used during training.
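The page does not prescribe a benchmarking protocol, so the following is only one possible way to obtain an FPS figure: time the inference call over a set of frames after a few warm-up runs. The names `measure_fps`, `infer` and `warmup` are hypothetical placeholders for your own model and settings.

```python
import time
from typing import Callable, Iterable


def measure_fps(
    infer: Callable[[object], object], frames: Iterable[object], warmup: int = 5
) -> float:
    """Return the average number of frames processed per second."""
    frames = list(frames)
    if not frames:
        raise ValueError("at least one frame is required")
    # Warm-up runs are excluded from timing (caches, lazy initialisation).
    for frame in frames[:warmup]:
        infer(frame)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    if elapsed <= 0:
        return float("inf")
    return len(frames) / elapsed


if __name__ == "__main__":
    # Dummy workload standing in for a real model's forward pass.
    fps = measure_fps(lambda frame: sum(range(10_000)), range(50))
    print(f"{fps:.1f} FPS")
```

If inference runs asynchronously on a GPU, the device should be synchronized before each clock reading, otherwise the measured time may not include the actual computation.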

Participate

To participate in the challenge, follow these steps:

- Download the LaRS dataset (LaRS webpage).

- Train a panoptic segmentation model on the LaRS training set. You can also use additional publicly available training data, but must disclose it during submission.

- Generate panoptic predictions on the LaRS test images. Your method should produce panoptic instance masks for stuff and thing classes. The prediction for each image should be saved in a single .png file with the same name as the original image.

  The .png file should follow the format of the ground-truth panoptic masks:

  - RGB values encode class and instance information:

    - R encodes the predicted class ID

    - G and B encode the predicted instance ID (instance_id = G * 256 + B)

  - Class IDs follow those specified in the GT annotation file. These are:

    - 0: VOID
    - 1: [stuff] static obstacles
    - 3: [stuff] water
    - 5: [stuff] sky
    - 11: [thing] boat/ship
    - 12: [thing] row boat
    - 13: [thing] paddle board
    - 14: [thing] buoy
    - 15: [thing] swimmer
    - 16: [thing] animal
    - 17: [thing] float
    - 19: [thing] other

  - The order and values of instance IDs are not important as long as each instance is assigned a unique ID

  - A common practice is to set the instance IDs of stuff classes to 0

- Create a submission .zip archive with your predictions.

  - The prediction .png files should be placed directly in the root of the .zip file (no extra directories).

  - Refer to the example submission file for additional information (lars_pan_example.zip).

- Upload your .zip file along with all the required information on the upload page. You need to register in order to submit your results.

- After submission, your results will be evaluated on the server. This may take 10+ minutes. Please refresh the dashboard page to see results. The dashboard will also display potential errors in case of failed submissions (hover over the error icon). You may evaluate at most one submission per day (per challenge track). Failed attempts do not count towards this limit.
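The mask encoding and archive layout described in the steps above can be sketched as follows. This is a minimal illustration using only the Python standard library, not an official tool. The function names are hypothetical, and writing the actual .png images is left to the image library of your choice.

```python
import zipfile
from pathlib import Path
from typing import Tuple


def encode_pixel(class_id: int, instance_id: int) -> Tuple[int, int, int]:
    """Map (class ID, instance ID) to the (R, G, B) value of a mask pixel."""
    if not 0 <= class_id <= 255:
        raise ValueError("class_id must fit into one channel (0-255)")
    if not 0 <= instance_id <= 65535:
        raise ValueError("instance_id must fit into two channels (0-65535)")
    # R holds the class ID; G and B hold the instance ID as G * 256 + B.
    return class_id, instance_id // 256, instance_id % 256


def decode_pixel(rgb: Tuple[int, int, int]) -> Tuple[int, int]:
    """Recover (class ID, instance ID) from an (R, G, B) mask pixel."""
    r, g, b = rgb
    return r, g * 256 + b


def build_submission_zip(pred_dir: str, zip_path: str) -> int:
    """Pack every .png in pred_dir into the root of a .zip archive.

    Returns the number of files written.
    """
    png_files = sorted(Path(pred_dir).glob("*.png"))
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for png in png_files:
            # arcname=png.name drops the directory part, so each file
            # ends up directly in the archive root.
            archive.write(png, arcname=png.name)
    return len(png_files)


if __name__ == "__main__":
    # A boat/ship pixel (class 11) belonging to instance 300.
    print(encode_pixel(11, 300))
    # A water pixel (class 3) with the conventional stuff instance ID 0.
    print(encode_pixel(3, 0))
```

Before uploading, it may be worth listing the archive contents (for example with `zipfile.ZipFile(path).namelist()`) to confirm that no entry contains a directory prefix.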

Terms and Conditions

- Submissions must be made before the deadline as listed on the dates page. Submissions made after the deadline will not count towards the final results of the challenge.

- Submissions are limited to one per day per challenge. Failed submissions do not count towards this limit.

- The winner is determined by the PQ metric.

- You are allowed to use additional publicly available data for training, but you must disclose it at the time of upload. This also applies to pre-training.

- In order for your method to be considered for the winning positions and included in the results paper, you will be required to submit a short report describing your method. More information regarding this will be released towards the end of the challenge.

- Note that we (as organizers) may upload models for this challenge, but we do not compete for a winning position (i.e. our models do not count on the leaderboard and merely serve as references). Thus, if your method is worse (in any metric) than one of the organizers' models, you are still encouraged to submit it. Methods that were submitted as part of the MaCVi 2024 challenge will be marked on the leaderboards.

In case of any questions regarding the challenge datasets or submission, please join the MaCVi Support forum.