Challenges / Approximate Supervised Object Distance Estimation


The top team(s) of this challenge will win prizes sponsored by Shield AI. Details of the prizes will be worked out soon.

This challenge focuses on developing algorithms for approximate supervised object distance estimation using monocular images captured from USVs. Participants are tasked with creating models that estimate the distance of navigational aids such as buoys, using only visual cues. The goal is to maximize accuracy of the object detection and distance estimation tasks while ensuring real-time operation.

The task is to develop a deep learning model capable of detecting objects and predicting their distances from a USV using monocular images. Models will be evaluated on both detection accuracy and distance estimation under varying environmental conditions.

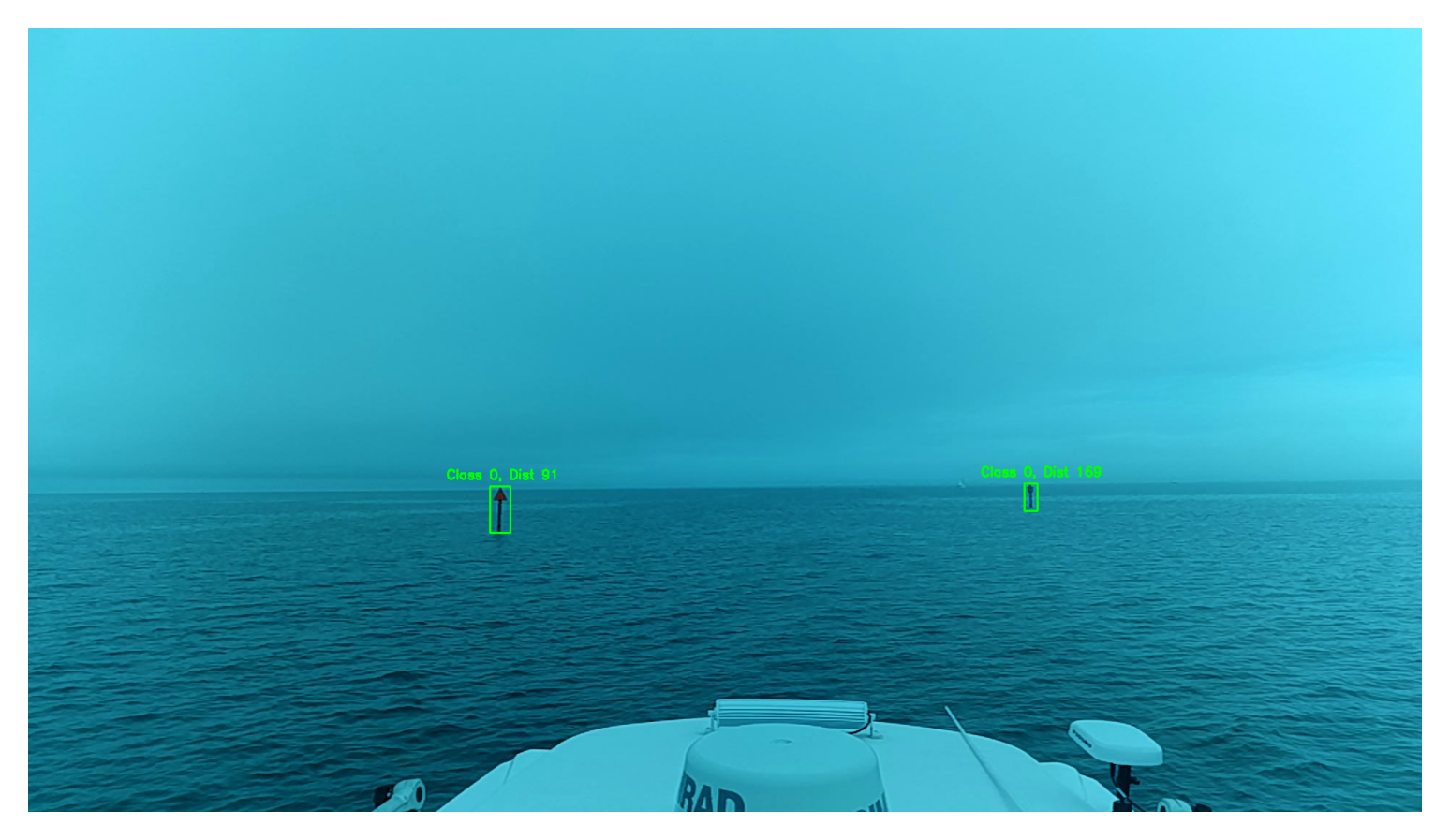

The dataset consists of approximately 3000 images of maritime navigational aids, primarily red and green buoy markers. Only the training set is provided; the test set is withheld to establish a benchmark for all submitted models in the competition. Accurate distance ground truth values are included, calculated using the haversine distance between the camera's GPS coordinates for each frame and the mapped buoy locations. Some images contain objects at considerable distances, appearing as only a few pixels in the video feed. These challenging samples may affect the object detector's performance, potentially lowering the mAP metric. Since the challenge evaluation includes object detection criteria (such as mAP), you may choose to exclude such samples from the training set if desired.
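As a rough illustration of how a haversine-based ground truth distance can be obtained from two GPS fixes, the sketch below computes the great-circle distance between a camera position and a buoy position. The function name `haversine_m`, the mean Earth radius constant, and the example coordinates are assumptions for illustration; the organizers' actual labeling pipeline is not described here.

```python
import math

# Assumption: mean Earth radius in meters; the dataset's exact constant is not stated.
EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


# Hypothetical camera and buoy positions (not taken from the dataset).
camera = (54.3300, 10.1500)
buoy = (54.3320, 10.1550)
distance = haversine_m(*camera, *buoy)
```

At the ranges relevant for buoys (tens to a few thousand meters), the spherical approximation is usually adequate, although the result depends directly on the accuracy of the GPS fix and the mapped buoy location.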

The submitted models are evaluated on a test set that is not publicly available.

Two different metrics are employed to assess the models' performance regarding object detection as well as distance estimation:

The weighted Distance Error Metric is defined as follows: $$\varepsilon_{Dist} = \sum_{i=1}^n \frac{c_i}{\sum_{j=1}^n c_j} \frac{|d_i - \hat{d}_i|}{d_i}$$ where $d_i$ is the ground truth distance, $\hat{d}_i$ is the predicted distance, and $c_i$ is the confidence of prediction $i$. For each prediction, the absolute error is divided by the ground truth value to obtain a relative error, which is then multiplied by the normalized confidence. Because predictions for distant objects typically deviate more from the ground truth in absolute terms, using the relative error ensures that smaller errors on closer objects are penalized as well.
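The formula can be sketched in a few lines of Python. This is a minimal illustration, not the official evaluation code: the function name `weighted_distance_error` is hypothetical, and the sketch assumes that predictions have already been matched to ground truth objects, a step the formula itself does not specify.

```python
def weighted_distance_error(confidences, gt_distances, pred_distances):
    """Confidence-weighted mean relative distance error.

    Assumes the three sequences are aligned, i.e. entry i of each refers to
    the same matched prediction / ground truth pair.
    """
    if not (len(confidences) == len(gt_distances) == len(pred_distances)):
        raise ValueError("all inputs must have the same length")
    total_conf = sum(confidences)
    if total_conf <= 0:
        raise ValueError("confidences must sum to a positive value")
    return sum(
        (c / total_conf) * abs(d - d_hat) / d
        for c, d, d_hat in zip(confidences, gt_distances, pred_distances)
    )


# Two predictions: a confident one that is 10% off and a weak one that is 50% off.
eps = weighted_distance_error([0.9, 0.1], [100.0, 200.0], [110.0, 100.0])
# 0.9 * 0.10 + 0.1 * 0.50 = 0.14
```

Because the weights are normalized confidences, a large error on a low-confidence prediction contributes less than the same error on a high-confidence one.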

To evaluate object detection performance and distance error jointly, the final combined metric is specified as follows: $$\text{Combined Metric} = \text{mAP@[0.5:0.95]} \cdot (1- \min(\varepsilon_{Dist}, 1))$$ Clamping $\varepsilon_{Dist}$ at 1 keeps the score non-negative. The final challenge placements are determined based on this score.
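As a quick sanity check of how the two terms interact, the combined score can be computed as below. The function name `combined_metric` and the example values are illustrative assumptions; the mAP value itself would come from a standard COCO-style evaluation, which is not reproduced here.

```python
def combined_metric(map_50_95, eps_dist):
    """Combined score: mAP@[0.5:0.95] scaled by (1 - clamped distance error)."""
    return map_50_95 * (1.0 - min(eps_dist, 1.0))


# Hypothetical values: mAP of 0.50 and a weighted distance error of 0.14.
score = combined_metric(0.50, 0.14)   # 0.50 * 0.86 = 0.43

# A distance error of 1 or more drives the score to zero, regardless of mAP.
worst = combined_metric(0.50, 1.7)    # 0.0
```

The multiplicative form means a strong detector cannot compensate for poor distance estimates, and vice versa.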

To participate in the challenge, follow these steps:

To help you get started and provide a brief introduction to the topic, we have developed an adapted YOLOv7 object detector that can also make distance predictions. You can find the code here.

To submit your model, you must first export it to ONNX format. The starter code repository for the adapted YOLOv7 model with distance estimation provides a Python script for this. Please be aware that modifications to this script may be necessary, depending on the structure of your model.

The submitted ONNX files must meet the following requirements:

If you have any questions, please join the MaCVi Support forum.