Download the MODS dataset from the dataset page. Train your model on external data (e.g. using MaSTr1325). Upload a zip file with results and predictions on the upload page.

The MODS Benchmark is hosted here as part of the upcoming MaCVi '23 Workshop. The goal of MODS is to benchmark segmentation-based and detection-based obstacle detection methods for the maritime domain, specifically for use in unmanned surface vehicles (USVs). It contains 94 maritime sequences captured using a radio-controlled USV. Instead of using general segmentation metrics, MODS scores models using USV-oriented metrics that focus on the obstacle detection capabilities of methods.

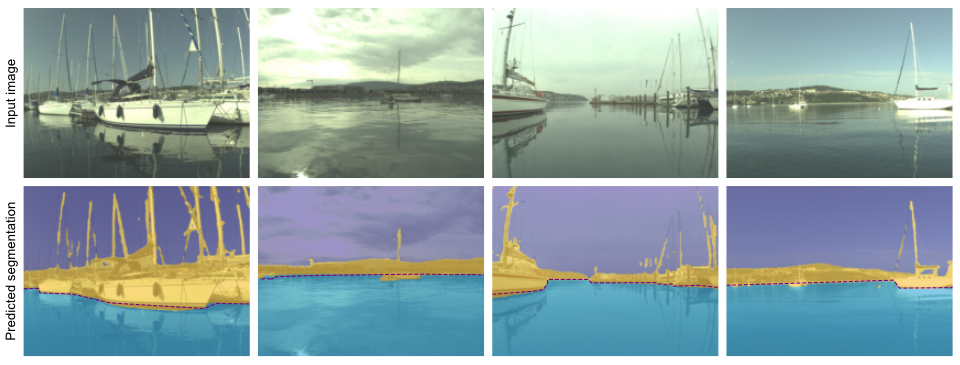

For the obstacle segmentation track, your task is to develop a semantic segmentation method that classifies the pixels in a given image into one of three classes: sky, water or obstacle. The MaSTr1325 dataset was created specifically for this purpose and we suggest using it to train your models. For an example of training on MaSTr1325 and predicting on the MODS dataset, see WaSR.

Create a semantic segmentation method that classifies the pixels in a given image into one of three classes: sky, water or obstacle. An obstacle is everything that the USV can crash into or that it should avoid (e.g. boats, swimmers, land, buoys).
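As a rough illustration of the three-class output, the sketch below turns per-pixel class scores into a label map by picking the highest-scoring class for each pixel. The class indices (0 = obstacle, 1 = water, 2 = sky), the function name and the nested-list layout are assumptions made for this example; they are not the encoding required by the benchmark.

```python
# Assumed class indices, used for this example only.
OBSTACLE, WATER, SKY = 0, 1, 2


def scores_to_labels(scores):
    """Convert per-pixel class scores into a label map.

    scores[row][col] is a sequence of three scores, one per class.
    Returns a nested list of the same height and width holding, for each
    pixel, the index of the class with the highest score.
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


# Tiny 2x2 "image" with hypothetical scores per pixel.
example_scores = [
    [(0.1, 0.2, 0.7), (0.6, 0.3, 0.1)],
    [(0.2, 0.7, 0.1), (0.1, 0.8, 0.1)],
]
label_map = scores_to_labels(example_scores)
```

A real method would do the same with an array library on full-resolution network outputs; plain lists are used here only to keep the example free of dependencies.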

MODS consists of 94 maritime sequences totalling approximately 8000 annotated frames with over 60k annotated objects. It provides annotations for two types of obstacles: i) dynamic obstacles, which are objects floating in the water (e.g. boats, buoys, swimmers) and ii) static obstacles, which are all remaining obstacle regions (e.g. shoreline, piers). Dynamic obstacles are annotated using bounding boxes. For static obstacles, MODS annotates the boundary between static obstacles and water as polylines.

The MODS evaluation protocol is designed to score predictions in a way that is meaningful for practical USV navigation. Methods are evaluated in terms of:

From the perspective of USV navigation, prediction errors closer to the boat are more dangerous than errors farther away. To account for this, MODS also separately evaluates the dynamic obstacle detection performance (PrD, ReD, F1D) within a radial area of 15 m in front of the boat (i.e. the danger zone).
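Assuming PrD, ReD and F1D denote the usual precision, recall and F1 score restricted to the danger zone, the sketch below shows how such scores follow from detection counts. The function name and the example counts are hypothetical, and the rules MODS uses to decide what counts as a true or false positive are not reproduced here.

```python
def detection_scores(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (precision, recall, F1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    if precision + recall == 0:
        # No correct detections at all: define F1 as zero to avoid dividing by zero.
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts for dynamic obstacles inside the danger zone.
pr_d, re_d, f1_d = detection_scores(tp=8, fp=2, fn=2)
```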

To determine the winner of the challenge, the average of the F1 and F1D scores will be used as an overall measure of the quality of a method. In case of a tie, μA will be considered.
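The ranking rule can be sketched as follows. The `Entry` class, its field names and the example values are hypothetical, and the sketch assumes that a higher μA is better when breaking ties.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    name: str
    f1: float    # overall F1 score
    f1_d: float  # F1 score inside the danger zone (F1D)
    mu_a: float  # tie-breaker, assumed here to be "higher is better"

    @property
    def overall(self) -> float:
        """Average of F1 and F1D, used as the main ranking score."""
        return (self.f1 + self.f1_d) / 2


def rank(entries):
    """Sort entries by overall score, breaking exact ties with mu_a (both descending)."""
    return sorted(entries, key=lambda e: (e.overall, e.mu_a), reverse=True)


# Hypothetical submissions; A and B tie on the overall score.
entries = [
    Entry("A", f1=0.875, f1_d=0.625, mu_a=0.90),
    Entry("B", f1=0.75, f1_d=0.75, mu_a=0.95),
    Entry("C", f1=0.875, f1_d=0.75, mu_a=0.85),
]
ranking = [e.name for e in rank(entries)]
```

Here C has the highest average, and B is placed ahead of A because of its higher μA.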

Furthermore, we require every participant to submit information on the speed of their method, measured in frames per second (FPS). Please also indicate the hardware that you used. Lastly, you should indicate which datasets (including those used for pretraining) you used during training and whether you used metadata.
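One simple way to obtain an FPS figure is to time the prediction loop over a set of frames. The sketch below is a minimal example of that idea; `measure_fps`, the `predict` callable and the warm-up count are assumptions for illustration, not a measurement procedure prescribed by the challenge.

```python
import time


def measure_fps(predict, frames, warmup=2):
    """Estimate frames per second of `predict` over `frames`.

    A few warm-up calls are made first so that one-off start-up costs
    (e.g. lazy initialisation) do not distort the timing.
    """
    for frame in frames[:warmup]:
        predict(frame)

    start = time.perf_counter()
    for frame in frames:
        predict(frame)
    elapsed = time.perf_counter() - start

    if elapsed == 0:
        # Timer resolution too coarse for this workload.
        return float("inf")
    return len(frames) / elapsed


# Stand-in "model" and frames, used only to make the example runnable.
dummy_frames = list(range(50))
fps = measure_fps(lambda frame: sum(range(1000)), dummy_frames)
```

When the model runs on a GPU, asynchronous execution may need to be synchronised before the timer is read, otherwise the measured time can be too short.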

To participate in the challenge, you can perform the following steps:

python modb_evaluation.py --config-file configs/mods.yaml <method_name>

results_<method_name>.json and results_<method_name>_overlap.json.

results.json: this is the results_<method_name>.json file from the results directory. Note that you have to rename the file.

predictions: directory containing the predicted segmentation masks of your method on MODS.

code: directory containing the code of your method. Include all the code files your method requires to run.

    results.json
    code
    ├── net.py
    └── ...
    predictions
    ├── kope100-00006790-00007090
    │   ├── 00006790L.png
    │   ├── 00006800L.png
    │   └── ...
    ├── ...
    ├── stru02-00118250-00118800
    │   ├── 00118250L.png
    │   ├── 00118260L.png
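The layout above can be packaged with Python's standard `zipfile` module. The sketch below is one possible way to do it; the function name `build_submission` and all paths are hypothetical, and it assumes that `results.json`, `code` and `predictions` are expected at the root of the archive.

```python
import zipfile
from pathlib import Path


def build_submission(results_file, predictions_dir, code_dir, out_zip):
    """Create a zip with results.json, predictions/ and code/ at its root."""
    with zipfile.ZipFile(out_zip, "w", zipfile.ZIP_DEFLATED) as zf:
        # Store the evaluation output under the required name results.json,
        # regardless of what the file is called on disk.
        zf.write(results_file, arcname="results.json")

        for source, name in ((Path(predictions_dir), "predictions"),
                             (Path(code_dir), "code")):
            for path in sorted(source.rglob("*")):
                if path.is_file():
                    # Keep the sub-directory structure (e.g. one folder per sequence).
                    arcname = (Path(name) / path.relative_to(source)).as_posix()
                    zf.write(path, arcname=arcname)
    return out_zip
```

For example, `build_submission("results/results_mymethod.json", "predictions", "code", "submission.zip")` would store the results file as `results.json`, which takes care of the renaming step (the method name `mymethod` is a placeholder).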