1st Workshop on Maritime Computer Vision (MaCVi)

SeaDronesSee v2 - Object Detection

TLDR

Download the SeaDronesSee Object Detection v2 dataset on the

dataset page. Upload a

json-prediction on the test set on the

upload page.

Overview

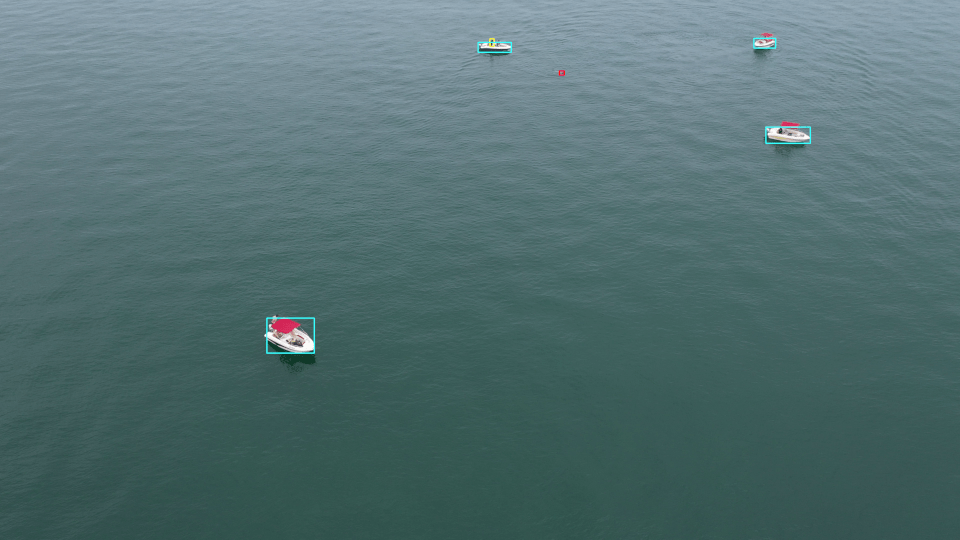

The SeaDronesSee benchmark was recently

published in WACV 2022. The goal of this benchmark is to

advance computer vision algorithms in Search and Rescue missions. It is

aimed at detecting humans, boats and other objects in open

water. See the

Explore page for some annotation examples.

The task of object detection in maritime search and rescue is far from solved. For example, the best performing

model of the SeaDronesSee object detection track currently

only achieves 36% mAP. Compare that to the common

COCO benchmark with the best performer achieving over

60% mAP. For the challenge of the workshop, we are going to make

a few changes from the original SeaDronesSee object detection benchmark.

In addition to the already publicly available

data, we collected further training data, which is included at the start of the challenge. In particular, we extend

the

object detection track of SeaDronesSee by almost 9K newly captured images depicting the sea surface from the

viewpoint

of a UAV.

The task is to detect the classes

swimmer, boat, jetski, buoy, life saving appliance (life jacket/lifebelt). The

ground truth bounding boxes are available and the

evaluation protocol is based on the standard mean average precision. Owing to the application scenario, we

offer an additional sub-track where only class-agnostic

models are evaluated which resembles the use-case

of detecting anything that is not water. Furthermore,

we require participants to upload information on their

used hardware and runtime.

Task

Create an object detector that, given an image, outputs bounding boxes for the classes of interest. The bounding boxes

are rectangular axis-aligned boxes.

There are two subtracks:

1. Standard Object Detection: In the first subtrack, you

should localize and classify all annotated classes as given below.

2. Binary Object Detection: In the second

subtrack, you should localize and classify objects in a binary fashion: water and non-water.

Your submission to the first subtrack will automatically be evaluated on the second subtrack by merging all your

predicted classes to a single class "non-water". However, you are encouraged to specifically train and submit a model on

the second subtrack since

you might improve the accuracy for the (possibly simpler) binary prediction task.

Dataset

There are 14,227 RGB images for the task of object detection (training: 8,930; validation: 1,547;

testing: 3,750).

The images are captured from various altitudes and viewing angles ranging

from 5 to 260 meters and 0 to 90° degrees while providing the respective meta information for altitude, viewing

angle and other meta data for almost all frames,

which are of 3840x2160, 5456x3632 and 1229x934 resolution. Each image is annotated with labels for the classes

swimmer, boat, jetski, buoy, life saving appliance (life jacket/lifebelt)

Additionally, there is an

ignore class. This region contains difficult to label or ambiguous objects. We

blackened out these regions

in the images already.

We provide meta data for most of the images. However, there is some number of images not containing any meta labels.

Although the

bounding box annotations for the test set are withheld, the

meta data labels for the test set are available

here.

Evaluation Metrics

We evaluate your predictions on the commonly used AP, AP

50, AP

75, AR

1 and

AR

10.

We use the commonly used COCO evaluation protocol. You can see the

od.py protocol on our

Github.

For the first subtrack, we average the AP results over all classes. For the second subtrack, we only have a single

class.

The determining metric for winning will be

AP. In case of a draw,

AP50 counts.

Furthermore, we require every participant to submit information on the speed of their method measured in frames per

second walltime.

Please also indicate the hardware that you used. Lastly, you should indicate which data sets (also for pretraining) you

used during training

and whether you used meta data.

Participate

In order to participate, you can perform the following steps:

- Download the dataset SeaDronesSee Object Detection v2 (not the old one!) on the dataset

page.

- Visualize the bounding boxes using these

Python scripts or Google

colabs. You should

adapt the paths for that.

- Train an object detector of your choice on the dataset. Optimally, you train a model for each of the stated

subtracks individually.

We provide you with sample object

detection training pipelines on Git.

- Create a json-file in COCO style that are the predictions on the test set (see also the dataset page for more details).

Find a sample submission file for the standard object detection here. For the binary track,

download a sample file here.

- Upload your json-file along with all the required information in the form here. You need

to register first.

Make sur you choose the proper track (Object Detection v2 or Binary Object Detection v2).

- If you provided a valid file and sufficient information, you should see your method on the leaderboard. Now,

you can reiterate. You may upload

at most three times per day (independent of the challenge track).

Terms and Conditions

- Submissions must be made before the deadline as listed on the

dates page

- You may submit at most 3 times per day independent of the challenge

- The winners are determined by the AP metric (AP50 in case of tie)

- You are allowed to use any publicly available data for training but you must list them at the time of upload.

This also applies to pretraining.

- Note that we (as organizers) may upload models for this challenge BUT we do not compete for a winning position

(i.e. our models do not count on the leaderboard and merely serve as references). Thus, if your method is worse

(in any metric)

than one of the organizer's, you are still encouraged to submit your method as you might win.

In case of any questions regarding the challenge modalities or uploads, please direct them to

benjamin.kiefer@uni-tuebingen.de.